Quality Auditing

During the design phase, auditing will be undertaken by the Project Quality Engineer to ensure that the design output conforms to the design input requirements.

Design verification should be planned, performed and fully documented as part of the design process. Depending on the programme of work, it will be undertaken as follows:

- As part of the Project Team, with attendance at Design Reviews.
- At a salient point in the project's development, by checking calculations and comparing the new design with company standards and similar proven designs. This may be undertaken on completion of design, before manufacture and delivery.
- As a periodic project audit.

This is to ensure that the required Procedures and Standards for work are adopted.

Typical audit process for a system audit.

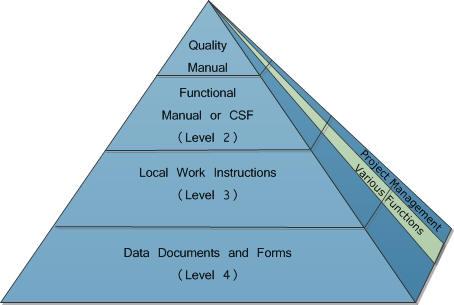

Documentation Control

A key function of Quality is to define the documentation structure and the documentation style guides; a consistent document format can best be achieved through the use of templates.

The various functional processes are verified by audit against the chosen quality methodology.

Workmanship Standards

Workmanship Standards for manufacture will be in accordance with the CMMI Ltd Quality Assurance Workmanship Standards Manual. These standards will be modified and amended in line with the general workmanship requirements of the customer. Where a contract requires specific criteria for manufacture, the Workmanship Standards Manual may be further expanded by any of the following, as identified in the associated Quality Plan.

Inspection

Inspection is undertaken in accordance with the standards for manufacture. Where more appropriate for a programme of work, specific standards for production and installation inspection, and testing requirements, may be identified in a Quality Plan.

Inspection and testing shall be performed throughout the manufacturing and integration stages.

The inspector or test engineer is responsible for ensuring that the equipment used for inspection and test is in calibration and is electrically safe.

Inspection by operators, automated inspection gauges, lot sampling, first inspection and test, or any other type of inspection may be employed in any combination desired. The proficiency of each method used will be assessed to ensure product quality and integrity.

Certain chemical, metallurgical, biological, sonic, electronic and radiological processes are so complex that specific work instructions shall be produced for them. These will identify any specific environmental, certification or monitoring requirements.

The level of inspection will be adjusted, tightening or lessening the type and amount of inspection, depending on risk analysis.
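The risk-based adjustment described above can be sketched as a simple mapping from a risk score to an inspection level. This is a minimal illustration only: the procedure does not define a scoring scheme, so the 1 to 5 criticality and likelihood scales, the product score, the threshold values (`low`, `high`) and the level names are all hypothetical.

```python
from enum import Enum


class InspectionLevel(Enum):
    # Hypothetical level names; the procedure only says "tighten or lessen".
    REDUCED = "reduced"
    NORMAL = "normal"
    TIGHTENED = "tightened"


def risk_score(criticality: int, likelihood: int) -> int:
    """Assumed 5 x 5 risk matrix: the score is the product, in the range 1-25."""
    for name, value in (("criticality", criticality), ("likelihood", likelihood)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return criticality * likelihood


def select_inspection_level(criticality: int, likelihood: int,
                            low: int = 6, high: int = 15) -> InspectionLevel:
    """Tighten inspection for high-risk items and lessen it for low-risk ones.

    The thresholds are hypothetical defaults, not values from this procedure.
    """
    score = risk_score(criticality, likelihood)
    if score >= high:
        return InspectionLevel.TIGHTENED
    if score <= low:
        return InspectionLevel.REDUCED
    return InspectionLevel.NORMAL


print(select_inspection_level(5, 4).value)  # tightened (score 20)
print(select_inspection_level(2, 2).value)  # reduced (score 4)
print(select_inspection_level(3, 3).value)  # normal (score 9)
```

Any real thresholds would need to be agreed as part of the project's own risk analysis.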

Inspection results shall be recorded by Quality Assurance on the Process Control Record.

Inspection of sub-assemblies that cannot be inspected later shall be adequate to demonstrate their conformance with specification requirements.

Where inspection is undertaken off-site and a Process Control Record or Goods Inwards Note is not applicable, a Strip and Investigation Report (OP10SIR1.DOC) may be used for formal hardware evaluation.

Testing

Unless project needs require otherwise, the basic set of test specifications comprises Hardware Integration Test (HIT) Specifications, Subsystem Integration Test (SIT) Specifications, Factory Acceptance Test (FAT) Specifications and Site Acceptance Test (SAT) Specifications. Production test specifications are usually produced by the relevant equipment supplier. In general, test specifications contain the detailed information needed to carry out the tests identified in the test plan. This includes the instructions for setting up and conducting the tests, and the methods for analysing the results. If particular adaptation data settings are required before or during a test, these may be listed in an annex or appendix.

All test specifications should be written so that the tests are repeatable and could be run by someone with a reasonable knowledge of the system under test. It is neither practical nor sensible to try to make the specifications so detailed that they could be run by a non-technical person.

However, FAT and SAT specifications are likely to need a greater level of detail, because customers are often actively involved in the conduct of these tests.

Nevertheless, the aim when writing any specification should be to avoid excessive or unnecessary detail, keeping documentation costs to a minimum. Test specifications should be produced by Engineering, approved by QA, and should identify:

- The product to be tested.
- The applications to be performed.
- Who the users are and their requirements.
- Product function and performance requirements.
- Software or equipment versions for testing.
- Environmental and test equipment needed, including any special tools required for testing.
- Documentation required to undertake testing.
- The method by which results shall be recorded (e.g. electronically or on test result sheets) and how they shall be reported.
- Reliability requirements.
- Serviceability acceptable during testing, including the policy on software changes and their impact on test results.
- Test programs that must be developed.
- Safety considerations.
- Location of testing.
- Test staffing requirements, including subcontracted work.
- Schedule requirements.
- Sample size for testing.
- Level of accuracy or tolerance required.
- Time within supplier FATs to undertake product QA; it is necessary to ensure that supplier FATs include such a period.
- Dependencies:
  - Hardware.
  - Software.
  - Equipment.
  - Personnel.

Quality Assurance will be responsible for reviewing and agreeing all test specifications and test schedules produced by CMMI Ltd.

Test specifications may be formulated in two parts:

- Part 1, Production Acceptance Specifications.
- Part 2, Production Test Schedules.

Where Acceptance Test Specifications are written by major sub-contractors, they will be written in a format to be agreed by CMMI Ltd.

The results of all testing undertaken by CMMI Ltd will be recorded on a Test Result Sheet (OP10TRS1.DOC), and the completed results filed in the Quality Assurance Project file.

Test Engineering shall develop test procedures and identify test points in production. The requirements in NES 1018 (Requirements for Testing and Test Documentation) and DEF-STAN 00-52 may be used as a guide to test specification criteria. Appropriate templates for HIT, SIT, FAT and SAT specifications are available as local Work Instructions.

The Test Preparations section contains any information that is relevant to the whole test rather than specific to one particular test case. It may also include information about pre- and post-test activities.

Within this section, details of any hardware preparation should be included. These may cover some or all of the following, if applicable:

- Any specific hardware to be used, by name and/or number.
- A check for evidence of satisfactory calibration of test equipment.
- Any switch settings and cabling necessary to connect the hardware; these shall be identified by name and location.
- Diagrams to show hardware items, interconnections, and control and data paths.
- Precise instructions on how to place the hardware in a state of readiness.

Similarly, details of software preparation are included. These describe any actions required to bring the software under test into a state in which it is ready for testing, and may also include similar information for test software and/or support software. Such information may include some or all of the following, if applicable:

- The storage medium of the software under test.
- The storage medium of any test or support software, e.g. emulators, simulators, data reduction programs.
- When the test or support software is to be loaded.
- Instructions, common to all test cases, for initialising the software under test and any test or support software.
- Instructions for loading and configuring any COTS software products.
- Common adaptation data to be used.

Initialisation supplements, on a per-test-case basis, the information given in the Test Preparations section described above. Where applicable, the following information may be included:

- Hardware and software configuration.
- Flags, pointers and control parameters to be set or reset prior to test commencement.
- Pre-set or adaptable data values.
- Pre-set hardware conditions or electrical states necessary to run the test.
- Initial conditions to be used in making timing measurements.
- Conditioning of the simulated environment.
- Instructions for initialising the software.
- Special instructions peculiar to the test.

Test Inputs provides the following information, where applicable:

- Name, purpose and description of each input.
- Source of the input.
- Whether the input is real or simulated.
- Time or event sequence of the input.

Test Procedure contains the bulk of the test specification, including the step-by-step instructions. These take the form of a series of individually numbered steps, listed sequentially in the order in which they are to be performed.

The following types of paragraph should be included in each test case:

- Introductions and explanations of the test case (in normal type face).
- Explanation of a test step (in normal type face).
- Test operator actions and equipment operations required for a test step (in bold type face).
- Expected result or system response (in italic type face).

Note that a result box may be provided in the right-hand margin. During conduct of the test, this box may be used to hold a tick if the step is a simple pass, a number or value if a measurement is required, or a reference to an observation if the step fails or causes an unexpected side effect.
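As an illustration only, a test step and its right-hand result box could be modelled as below. The record layout, the field names and the observation reference format (`OBS-12`) are hypothetical; the procedure defines the paragraph types and the three kinds of result-box entry, not a data format.

```python
from dataclasses import dataclass
from typing import Optional, Union

# Result box contents: True = tick (simple pass), float = measured value,
# str = reference to an observation raised because the step failed or
# caused an unexpected side effect. None = step not yet run.
ResultBox = Optional[Union[bool, float, str]]


@dataclass
class TestStep:
    number: int
    explanation: str        # printed in normal type face
    operator_action: str    # printed in bold type face
    expected_result: str    # printed in italic type face
    result: ResultBox = None


def numbered_sequentially(steps: list[TestStep]) -> bool:
    """Steps must be individually numbered 1, 2, 3, ... in execution order."""
    return [s.number for s in steps] == list(range(1, len(steps) + 1))


def render_result(step: TestStep) -> str:
    """Text to write in the result box for one step."""
    if step.result is True:
        return "\u2713"                  # tick
    if isinstance(step.result, float):
        return f"{step.result:g}"        # measured value
    if isinstance(step.result, str):
        return f"see {step.result}"      # observation reference
    return ""                            # not yet run


steps = [
    TestStep(1, "Confirm the unit powers up.", "Switch on the PSU.", "Power LED lit."),
    TestStep(2, "Check the supply rail.", "Measure the 5 V rail.", "4.75 V to 5.25 V."),
    TestStep(3, "Check the link.", "Connect the host.", "Link established."),
]
steps[0].result = True
steps[1].result = 5.02
steps[2].result = "OBS-12"
print(numbered_sequentially(steps))       # True
print([render_result(s) for s in steps])  # ['✓', '5.02', 'see OBS-12']
```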

Prior to test conduct, a Test Readiness Review (TRR) will be convened to ensure adequate preparation for formal testing. At this review, the following shall be considered:

- Scope of the test (test specification sections to run, IOs to clear, etc.).
- Adequacy of the test specifications in accomplishing test requirements.
- Review of the results of previous runs of the test specification.
- Hardware status (hardware build state reference).
- Software status.
- Hardware and software build states.
- Any test tools or external emulators to be used (version numbers, etc.).
- Test roles and responsibilities.
- Status of outstanding observations.
- Go/No-go decision.

This is a formal meeting, chaired by the Project Manager or nominee; its outcome and points are recorded on page 1 of the Test Result Sheet before testing.

A Test Readiness Review would normally be held a few days before the planned start date of a test. However, in some instances, with the agreement of all parties concerned, the TRR can be held immediately prior to the test.
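The TRR checklist and its timing rule can be captured in a small record such as the one below. This is a hypothetical sketch: the class and field names are illustrative, "immediately prior" is assumed to mean on the day of the test, and the rule that a Go is only recommended when every item has been found satisfactory is an assumption, since the procedure leaves the Go/No-go decision to the meeting itself.

```python
from dataclasses import dataclass, field
from datetime import date

# Items the TRR shall consider; the Go/No-go decision is the meeting's output.
TRR_ITEMS = (
    "Scope of the test",
    "Adequacy of test specifications",
    "Results of previous runs",
    "Hardware status",
    "Software status",
    "Hardware and software build states",
    "Test tools and external emulators",
    "Test roles and responsibilities",
    "Status of outstanding observations",
)


@dataclass
class TestReadinessReview:
    chair: str                      # Project Manager or nominee
    review_date: date
    test_start: date
    all_parties_agree_same_day: bool = False
    # Item -> True once it has been considered and found satisfactory.
    findings: dict[str, bool] = field(default_factory=dict)

    def outstanding_items(self) -> list[str]:
        return [item for item in TRR_ITEMS if not self.findings.get(item, False)]

    def timing_ok(self) -> bool:
        # Normally a few days before the test; on the day of the test only
        # with the agreement of all parties concerned (assumed interpretation).
        if self.review_date > self.test_start:
            return False
        if self.review_date == self.test_start:
            return self.all_parties_agree_same_day
        return True

    def recommend_go(self) -> bool:
        # Assumed rule: Go only if timing is acceptable and nothing is outstanding.
        return self.timing_ok() and not self.outstanding_items()


trr = TestReadinessReview("Project Manager", date(2024, 3, 4), date(2024, 3, 7),
                          findings={item: True for item in TRR_ITEMS})
print(trr.recommend_go())        # True
trr.findings["Software status"] = False
print(trr.outstanding_items())   # ['Software status']
print(trr.recommend_go())        # False
```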

The items selected for final inspection will be determined by their criticality and their likelihood of being found faulty. Sampling inspection may be adequate in some circumstances.

Testing of all equipment will be recorded on a Test Result Sheet (OP10TRS1.DOC). The Engineer is responsible for ensuring that the equipment used for testing is calibrated and that this is recorded.

Testing should be conducted in the presence of Quality Assurance, who will sign the Test Result Sheet.

Where the test engineer is known to have the right experience and the testing is low risk, the engineer may, with the agreement of Quality Assurance, undertake testing without Quality Assurance oversight. The test result sheets will then be signed by the engineer and presented to Quality Assurance for checking and signing.

The Test Result Continuation Sheet (OP10TRC1.DOC) is to be used to record specific criteria of the test specification.

The test description and results must be recorded where measured values are taken that may be used for subsequent analysis, or where ambiguity would otherwise result.

A number of paragraphs can be recorded on one line, provided the result is to specification.

A new line in the test result sheet is to be used to record each failure or required change to the specification.

Where testing identifies an error in the test specification, this must be recorded in the column headed 'spec change'.

Once it has been recorded that a specification change resolves the problem, and this has been noted in the remarks column, the subsequent result may be marked as a pass.

All failures and specification changes must be recorded and the relevant test specification paragraph identified.
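The recording rules above can be expressed as checks on each line of the result sheet. The sketch below is illustrative: the `ResultLine` layout, the "pass"/"fail" values and the wording of the messages are hypothetical and do not reproduce the OP10TRS1.DOC or OP10TRC1.DOC forms.

```python
from dataclasses import dataclass


@dataclass
class ResultLine:
    paragraphs: list[str]        # test specification paragraph references
    result: str                  # "pass" or "fail"
    spec_change: bool = False    # the 'spec change' column
    remarks: str = ""            # the remarks column


def validate_line(line: ResultLine) -> list[str]:
    """Return the recording rules this line breaks (empty list if none)."""
    problems = []
    if not line.paragraphs:
        problems.append("relevant test specification paragraph not identified")
    if line.result not in ("pass", "fail"):
        problems.append(f"unknown result {line.result!r}")
    # Several paragraphs may share one line only if the result is to specification.
    if len(line.paragraphs) > 1 and (line.result != "pass" or line.spec_change):
        problems.append("each failure or spec change needs its own line")
    # A pass after a spec change needs the resolution noted in the remarks.
    if line.spec_change and line.result == "pass" and not line.remarks.strip():
        problems.append("spec change marked as pass without a remark")
    return problems


print(validate_line(ResultLine(["4.1", "4.2", "4.3"], "pass")))   # []
print(validate_line(ResultLine(["4.4", "4.5"], "fail")))
# ['each failure or spec change needs its own line']
print(validate_line(ResultLine(["4.6"], "pass", spec_change=True)))
# ['spec change marked as pass without a remark']
```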

No product shall be despatched until all the activities specified in the Quality Plan or documented procedures have been satisfactorily completed and the associated data and documentation are available and authorised.
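The despatch rule is effectively a gate that must be clear before shipping. A minimal sketch, assuming hypothetical `Activity` and `Document` records drawn from the Quality Plan, might list whatever still blocks despatch:

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    completed_satisfactorily: bool


@dataclass
class Document:
    name: str
    available: bool
    authorised: bool


def despatch_blockers(activities: list[Activity], documents: list[Document]) -> list[str]:
    """List everything preventing despatch; an empty list means the gate is clear."""
    blockers = [f"activity not complete: {a.name}"
                for a in activities if not a.completed_satisfactorily]
    blockers += [f"document not available: {d.name}"
                 for d in documents if not d.available]
    blockers += [f"document not authorised: {d.name}"
                 for d in documents if d.available and not d.authorised]
    return blockers


activities = [Activity("Final inspection", True), Activity("FAT", True)]
documents = [Document("Test Result Sheet", True, True),
             Document("Process Control Record", True, False)]
print(despatch_blockers(activities, documents))
# ['document not authorised: Process Control Record']
```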

The factory acceptance test is a high-level functional test of the complete system, demonstrating the overall operation of the system without testing any specific part. It should be written so that it can be performed by a third party with limited knowledge of the system. Where site acceptance is not planned, this testing may involve Customer Acceptance.

CMMI Ltd Quality Assurance shall be represented at all trials, installation, commissioning and setting to work of any equipment where quality aspects are required to be assessed.

The objective of Site Acceptance Testing is to prove, through functional testing, the system's operation in the intended environment with live data. It shall demonstrate the system's operation with the required interfaces. Testing at site will be to Final Acceptance Test Schedules agreed with the Quality Engineer, and to the same criteria and procedures as in-house testing.

The Quality Assurance Manager may, at his discretion, delegate such tasks to a member of the trials, installation or commissioning team.

During system integration, it is important that regression testing is carried out to ensure that changes and enhancements have not adversely affected parts of the system that have previously been tested. Whilst it is not possible to define a precise set of rules for regression testing, the intention should always be to cover as wide a range of functions as possible, with particular emphasis on areas that are likely to have been affected.

Informal regression testing is usually performed using only the test engineer's knowledge of the system and intuitive skills based on known problem areas of previous systems. It is usually conducted without formality or repeatability in mind, as the primary objective is to gauge the ‘acceptability’ to SITD of the software build for full system integration.

The result of informal regression testing will be one of:

- The build is of sufficient quality to be released for system integration and software team use.
- The build is not of sufficient quality for system integration. Areas of software requiring attention are flagged to the software teams concerned.

Some criteria to be applied during informal regression testing are listed below, although this is not an exhaustive list. Any ‘No’ answer should be addressed with the question ‘Would using this build with these faults further the integration process?’. In most cases it is reasonably obvious whether the software is of acceptable ‘quality’; however, in case of doubt, the project Quality Engineer is the final authority.

- Does the system stand up during normal use?
- Do all the external interfaces work?
- Do the major internal interfaces appear to work?
- Is there any added functionality over the previous integration build?
- Is data displayed correctly on the screens?
- Is the integrity of the data good (no obvious data corruption)?
- Is the system usable (e.g. a response time of 30 seconds for each command may be deemed unusable for integration)?
- Does this system allow integration to proceed?
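One way to make the informal assessment explicit is sketched below. It is a hypothetical aid, not part of the procedure: unanswered criteria are assumed to count as ‘No’, the single `furthers_integration` answer stands for the follow-up question quoted above, and a missing answer (doubt) is referred to the project Quality Engineer as final authority.

```python
from typing import Optional

CRITERIA = (
    "Does the system stand up during normal use?",
    "Do all the external interfaces work?",
    "Do the major internal interfaces appear to work?",
    "Is there any added functionality over the previous integration build?",
    "Is data displayed correctly on the screens?",
    "Is the integrity of the data good?",
    "Is the system usable?",
    "Does this system allow integration to proceed?",
)


def assess_build(answers: dict[str, bool],
                 furthers_integration: Optional[bool] = None) -> tuple[str, list[str]]:
    """Return (decision, criteria answered 'No').

    decision is 'release', 'not released' or 'refer to Quality Engineer';
    the 'No' criteria are the areas to flag to the software teams.
    """
    # Assumption: a criterion that was not answered counts as 'No'.
    failed = [q for q in CRITERIA if not answers.get(q, False)]
    if not failed:
        return "release", []
    # Any 'No' is weighed against: "Would using this build with these
    # faults further the integration process?"
    if furthers_integration is None:
        return "refer to Quality Engineer", failed
    return ("release" if furthers_integration else "not released"), failed


all_yes = {q: True for q in CRITERIA}
print(assess_build(all_yes))  # ('release', [])
slow = {**all_yes, "Is the system usable?": False}
print(assess_build(slow, furthers_integration=False))
# ('not released', ['Is the system usable?'])
```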

If there has been a significant change in functionality during the integration phase, some or all of the integration tests may need to be re-run. The extent of the re-test will be decided in each individual case by the project Test Manager.

In the event of a problem during site acceptance, it may be necessary to undertake formal regression testing, driven by the customer or, in their absence, conducted in conjunction with QA. In either case, the starting point should be a selection of tests from the sub-system integration test specifications that demonstrates a large percentage of the functionality of the system. To these should be added extra tests in areas where software changes have been made since the previous formal testing exercise.

Other points to consider are as follows:

- Are extra tests needed in areas of functionality where problems have historically been encountered?
- Are specific performance tests appropriate, considering the latest changes to the software?
- Is a stability or soak test appropriate?
- Should tests with specific failure conditions be included?
- Could tests of functional areas that have shown no faults for some period of time be left out?
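The test selection described above can be approached as a coverage problem. The sketch below uses a greedy set-cover heuristic (a swapped-in technique, not one the procedure prescribes) to pick sub-system integration tests until a hypothetical `coverage_target` fraction of functional areas is covered, then adds every test touching an area with software changes or a history of problems. The test names, area labels and the 80% default are illustrative assumptions.

```python
from typing import AbstractSet


def select_regression_tests(tests: dict[str, set[str]],
                            changed: AbstractSet[str],
                            problem_areas: AbstractSet[str] = frozenset(),
                            coverage_target: float = 0.8) -> list[str]:
    """Pick SIT tests for formal regression testing.

    tests maps a test name to the functional areas it exercises.
    """
    all_areas = set().union(*tests.values())
    selected: list[str] = []
    covered: set[str] = set()
    # Greedy set cover: repeatedly take the test adding the most uncovered
    # areas until the target fraction of functionality is demonstrated.
    while len(covered) < coverage_target * len(all_areas):
        best = max((t for t in tests if t not in selected),
                   key=lambda t: len(tests[t] - covered), default=None)
        if best is None or not tests[best] - covered:
            break  # nothing left that adds coverage
        selected.append(best)
        covered |= tests[best]
    # Add every remaining test that touches a changed or historically
    # troublesome area.
    focus = set(changed) | set(problem_areas)
    for name, areas in tests.items():
        if name not in selected and areas & focus:
            selected.append(name)
    return selected


sit_tests = {
    "SIT-1": {"display", "comms"},
    "SIT-2": {"comms", "archive"},
    "SIT-3": {"alarms"},
    "SIT-4": {"display"},
    "SIT-5": {"reports"},
}
print(select_regression_tests(sit_tests, changed={"reports"}))
# ['SIT-1', 'SIT-2', 'SIT-3', 'SIT-5']
print(select_regression_tests(sit_tests, changed={"reports"}, problem_areas={"display"}))
# ['SIT-1', 'SIT-2', 'SIT-3', 'SIT-4', 'SIT-5']
```

Leaving out tests for areas that have shown no faults for some time, and adding performance, soak or failure-condition tests, remain judgement calls outside this sketch.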
